The following is a guest post from Chef’s Director of Product Marketing Julian Dunn.

This year at Sensu Summit, Fletcher Nichol and I gave a talk on systems architecture entitled “Pull, don’t push: Architectures for monitoring and configuration in a microservices era.” In this post, I’d like to reiterate and expand on some of the concepts in that presentation and make some more concrete recommendations for systems design in an era of complex distributed systems.

In order to talk about good systems architecture, I first thought it would be instructive to examine good physical architecture – that is, buildings. When I think about enduring architectural innovations that resemble today’s distributed computer systems, I can’t help coming back to Montréal’s Habitat 67 residential complex, designed by the Canadian architect Moshe Safdie for Expo 67.

Habitat 67 in Montréal. Source.

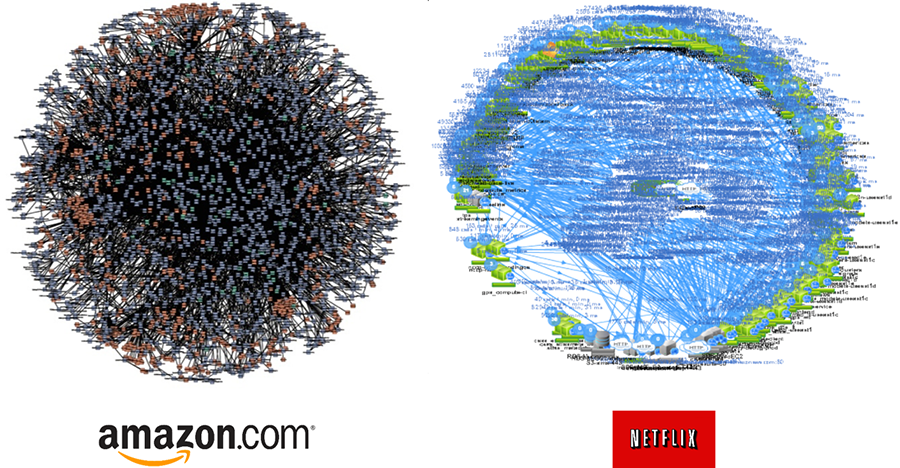

The building celebrated its 50th anniversary just last year, and the characteristics that make it such an iconic structure are the same ones that make good systems architecture enduring and resilient. For example, Safdie pioneered the use of modular components (they look like containers) that were repeated and re-used throughout the complex, much as a microservice is tasked with doing one thing and is invoked by many others. Even from a distance, the complex looks a lot like the microservice architectures of large web companies such as Amazon and Netflix (below).

Microservices architecture at Amazon and Netflix. Source.

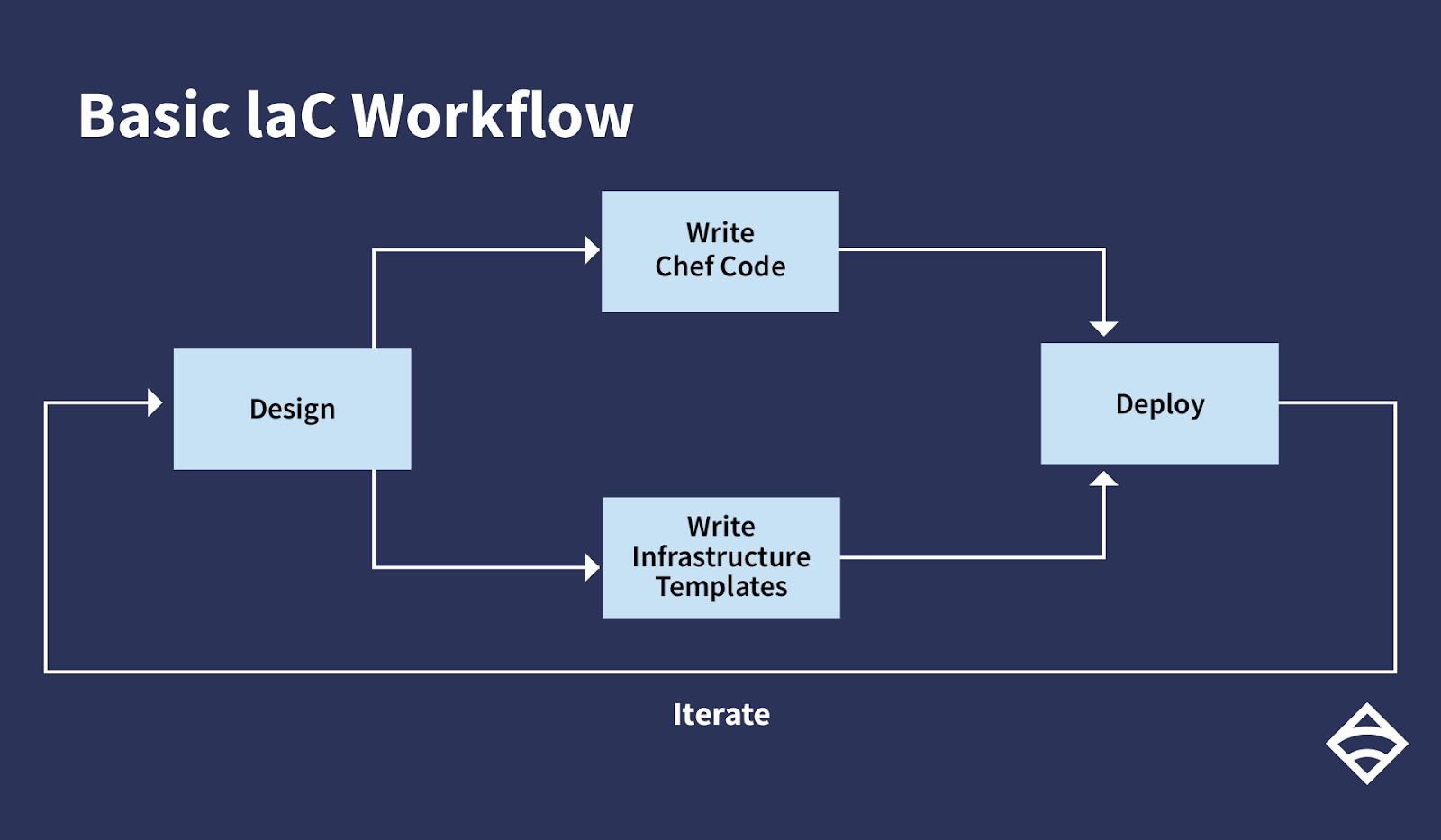

What’s embodied here in both of these architectures – physical and computer – are two main principles:

- Components share no global state;

- The contracts between components are clear, and they are peer-to-peer rather than centralized.

Unfortunately, many modern software architectures do not abide by either of these principles for sound engineering. This is what motivated me to write this talk.

At sufficiently large scale, managing a complex distributed system becomes the limiting factor to growth, rather than the ability to add or remove individual services. The marginal cost of new innovation (leading to additional software services) is minimal so long as the cognitive burden of understanding the system’s interactions doesn’t drastically increase. That condition does not hold in centralized system design, also known as orchestration, where components must depend on a single management plane that alone stores the global state and arbitrates how changes are made. If the management plane fails, the entire system is operationally dead.

Even Kubernetes, with its centralized master, has this architectural shortcoming. Kubernetes does have an “out,” however: you can pay someone else to run the management layer for you. That escape valve brings vendor lock-in and hefty managed services fees, because an architectural pattern that delegates operational resilience to a centralized management layer has significant financial rewards for the company operating that layer. Today’s Cambrian explosion of managed Kubernetes services (Amazon EKS, Google Kubernetes Engine, Azure Kubernetes Service, etc.) is a direct result of this design: the less your application components are self-aware, the more money the cloud vendor stands to make.

More important, however, is something you can’t escape even by outsourcing resilience to a cloud provider: your responsibility for the operability and comprehension of the system you have built. In a centralized, orchestrated system model, any error in an orchestration flow – something that is intended to run atomically to completion across a fleet of distributed components – simply stops the orchestration and leaves all the broken pieces for a human operator to understand. A centralized model requires that operator to understand the global state of the system in order to even start debugging it, rather than starting with the component that failed and fanning outwards towards the actual error. In the words of Patrick Debois, the father of DevOps: “Microservices: the code needs to fit in the dev’s head. But will the deployment/architecture still fit in ops’ head?”

Rather than a centralized or orchestrated system design, we recommend adopting the distributed autonomous actor model. Autonomous actors, first popularized by Mark Burgess in the design of convergent configuration management systems, declare a promised desired state and autonomously make progress towards that state. They expose interfaces that allow others to verify their promises, and they can also promise specific behaviors for when others fail. Even if you are purely using containers and microservices and have no use for traditional server-based configuration management, the principles still hold: a promise-based, eventually consistent autonomous actor model leads to better understanding, greater resilience against local failures, and improved recovery from them.
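To make the idea of a promised desired state a little more concrete, here is a minimal sketch in Python. It is my own illustration, not code from any configuration management tool: the `Actor` class, its `converge_step` and `promise_kept` methods, and the `run_until_converged` helper are all hypothetical names. Each actor repairs only its own drift, and peers can verify the promise without consulting any central management plane.

```python
from dataclasses import dataclass, field


@dataclass
class Actor:
    """Hypothetical autonomous actor that converges on its own promised state."""
    name: str
    desired: dict                                # the promised desired state
    actual: dict = field(default_factory=dict)   # what is currently true

    def converge_step(self) -> bool:
        """Repair at most one drifted setting; return True if something changed."""
        for key, value in self.desired.items():
            if self.actual.get(key) != value:
                self.actual[key] = value
                return True
        return False

    def promise_kept(self) -> bool:
        """Interface that lets any peer verify this actor's promise."""
        return all(self.actual.get(k) == v for k, v in self.desired.items())


def run_until_converged(actors, max_rounds=10):
    """Let every actor act on its own state until no actor reports a change.

    There is no shared global state here: the loop only stands in for time
    passing, and each actor decides independently what to repair.
    """
    for round_number in range(1, max_rounds + 1):
        changed = [actor.converge_step() for actor in actors]
        if not any(changed):
            return round_number
    return max_rounds


if __name__ == "__main__":
    web = Actor("web", desired={"port": 8080, "tls": True})
    db = Actor("db", desired={"replicas": 3}, actual={"replicas": 1})
    run_until_converged([web, db])
    print(web.promise_kept(), db.promise_kept())
```

If one actor fails partway, the others still make progress, and debugging can start from the single actor whose `promise_kept()` returns `False` rather than from the state of the whole fleet.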

It’s no accident that both Sensu and Habitat have a similar design based on the autonomous actor model. Sensu, in particular, flips the architecture of a monitoring system on its head. Traditional monitoring systems made in the pre-cloud era, like Nagios, use a centralized master that both periodically polls the endpoints being monitored and stores state. Lose that master, and the entire monitoring system is non-functional. Sensu, on the other hand, puts most of the logic and responsibility for monitoring at the edge, on the endpoints being monitored. The central data collection cluster, or backend, merely serves as an ingest and transit point for that data. Lose a backend, and events will just queue until a new one is available.
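The difference between polling from a master and pushing from the edge can be sketched in a few lines of Python. This is an illustration of the pattern only, under my own assumptions: the `Agent` and `Backend` classes and their methods are hypothetical and do not reflect Sensu’s actual API or wire protocol. The agent runs its own check and keeps events in a local queue whenever the backend cannot be reached.

```python
from collections import deque


class Backend:
    """Hypothetical ingest point: it receives events and never polls agents."""

    def __init__(self):
        self.available = True
        self.received = []

    def ingest(self, event):
        if not self.available:
            raise ConnectionError("backend unavailable")
        self.received.append(event)


class Agent:
    """Hypothetical edge agent that owns its check logic and an event queue."""

    def __init__(self, name, check, backend):
        self.name = name
        self.check = check          # callable returning a status code
        self.backend = backend
        self.queue = deque()

    def run_check(self):
        """Run the check locally, queue the result, then try to deliver."""
        self.queue.append({"agent": self.name, "status": self.check()})
        self.flush()

    def flush(self):
        """Deliver queued events in order; stop quietly if the backend is down."""
        while self.queue:
            try:
                self.backend.ingest(self.queue[0])
            except ConnectionError:
                return              # events stay queued for the next attempt
            self.queue.popleft()


if __name__ == "__main__":
    agent = Agent("web-1", check=lambda: 0, backend=Backend())
    agent.backend.available = False   # simulate losing the backend
    agent.run_check()
    agent.backend = Backend()         # a replacement backend appears
    agent.run_check()
    print(len(agent.backend.received), len(agent.queue))
```

Because the queue lives with the agent, losing a backend delays delivery but does not stop monitoring, which is the property the paragraph above describes.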

To demonstrate that Sensu and Habitat share a similar architectural design and are therefore a natural complement to one another, we, together with Chef partner Indellient, packaged Sensu in Habitat and showed that off at Sensu Summit. Using the Habitat runtime system in this way brings several benefits to a Sensu deployment:

- Backends and monitored nodes can be brought up in any order and the system will automatically converge

- Not all backends need to be available for monitored nodes to start sending data

- Replacement of backend nodes (either intentional or unintentional due to unplanned outages) becomes a trivial event, with this updated state automatically available to monitored nodes
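The first benefit in the list above, convergence regardless of start order, can be illustrated with a toy simulation. This is a sketch under my own assumptions and not a description of Habitat’s internals: the `Node` class, its `exchange` method, and the `bring_up` helper are hypothetical, and the peer-to-peer exchange of membership views is simply one way such order-independence could be achieved.

```python
import itertools


class Node:
    """Hypothetical peer that tracks which nodes it knows about, and their roles."""

    def __init__(self, name, role):
        self.name = name
        self.role = role
        self.known = {name: role}

    def exchange(self, other):
        """Merge membership views with a peer; both sides end up with the union."""
        merged = {**self.known, **other.known}
        self.known = dict(merged)
        other.known = dict(merged)

    def backends(self):
        return sorted(n for n, r in self.known.items() if r == "backend")


def bring_up(order):
    """Start nodes in the given order and return each node's view of the backends."""
    running = []
    for name, role in order:
        node = Node(name, role)
        if running:
            node.exchange(running[0])   # a newcomer contacts any running peer
        running.append(node)
    # A round of pairwise exchanges spreads every peer's view to all the others.
    for a, b in itertools.combinations(running, 2):
        a.exchange(b)
    return {node.name: node.backends() for node in running}


if __name__ == "__main__":
    members = [("backend-1", "backend"), ("backend-2", "backend"), ("agent-1", "agent")]
    for order in itertools.permutations(members):
        print([name for name, _ in order], bring_up(order)["agent-1"])
```

Whatever order the members start in, every node ends up with the same list of backends, without any node having been designated as the keeper of global state.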

To sum up, the rise of microservice architectures and massively distributed systems is going to require new patterns for software delivery, management, and deployment. If we can take one lesson from real-world architecture, it’s that centralized, orchestration-oriented approaches are no longer going to scale — either technically or cognitively.