You can’t have scalability without stability

This post was originally published in The New Stack.

Solutions for challenging technical problems shouldn’t result in a whole set of new ones. Sometimes, we make things harder on ourselves by choosing the new hotness to tackle technical problems (such as scaling infrastructure). We may be solving our problem in an interesting and fun way, but we bring on more complexity (and more problems) as a result of that technology choice.

I put out a few tweets a while back showing my true curmudgeon colors (AKA, a grumpy operator reminiscing about the good old days). And while initially the tweets were voiced in frustration over a technical issue I was grappling with, the sentiment remains true: we need a resurgence of boring tech (and I’m not the first to say it).

At the risk of truly proving myself to be that grumpy operator, there’s a case to be made for going back to the old — but tested, tried, and true — software of yesteryear. In this post, I make my case, drawing from one of my favorite examples: distributed versus relational databases.

Distributed databases: the good (and not so good)

Etcd 2.0 (its first stable release) came on the scene in January 2015 and started growing in popularity, with Cloud Foundry and Kubernetes driving significant adoption. Distributed databases like etcd are great for high availability, which they offer through replication. With multiple copies of the same piece of data on multiple machines, your data stays available even when one of those machines fails.

But the problem with distributed systems is that there are many more moving parts, not to mention their susceptibility to network partitions and slow members. As such, complexity goes up, and reasoning about the system as an operator becomes all the more difficult. There are also cases in which a distributed database won't perform nearly as well as its predecessor (more on that later!), because many of them require some form of consensus: a majority of the nodes in your cluster (a quorum) has to agree on the value of a particular key before a write is accepted. So now, not only do you have additional overhead, but you also have the chance for conflict, for which you need conflict resolution.
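To make the consensus overhead concrete: etcd uses the Raft protocol, in which a write is committed once a majority (a quorum) of members has accepted it. The Python sketch below computes quorum size and fault tolerance for a few cluster sizes. It models only the majority rule, not Raft itself.

```python
# Majority-quorum arithmetic for consensus-based stores such as etcd (Raft).
# Models only the "majority must agree" rule, not leader election or logs.

def quorum_size(members: int) -> int:
    """Smallest number of members that forms a majority."""
    return members // 2 + 1

def failures_tolerated(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum_size(members)

if __name__ == "__main__":
    for n in (1, 3, 4, 5, 7):
        print(f"{n} members: quorum={quorum_size(n)}, "
              f"tolerates {failures_tolerated(n)} failure(s)")
```

Two details fall out of the arithmetic: a four-member cluster tolerates no more failures than a three-member one (which is why odd cluster sizes are the usual recommendation), and every committed write must wait for a majority of members to acknowledge it.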

The thing about distributed databases, or really any technology, is that it has to fit your data needs. The data you put in it and the way you access it have to fit a certain mold. Forcing a workload onto a technology that wasn't designed for it is foolish.

As with many technical problems, these compound at scale. For instance, if you're abusing a distributed key-value store or database for something it wasn't designed for, it's going to be very problematic. At scale, you simply dump more gasoline on the fire: your problems get amplified and outages increase.

At certain levels, doubling the size of your infrastructure isn't a big deal, e.g., going from 100 to 200 servers. If you're at 10,000 servers and double to 20,000, that's another story. The stresses on the system are far more significant, and they can hit you in an instant.
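A quick back-of-the-envelope calculation shows why the same relative growth feels so different. Assume, purely for illustration, that every server writes one status update to a shared datastore every 10 seconds; that interval is a hypothetical number, not a Sensu or etcd default.

```python
# Back-of-the-envelope: absolute load added when a fleet doubles.
# REPORT_INTERVAL_SECONDS is a hypothetical assumption for illustration.

REPORT_INTERVAL_SECONDS = 10

def writes_per_second(servers: int, interval: float = REPORT_INTERVAL_SECONDS) -> float:
    """Steady-state write rate if every server reports once per interval."""
    return servers / interval

def added_load_when_doubling(servers: int) -> float:
    """Extra writes per second the datastore must absorb after doubling."""
    return writes_per_second(2 * servers) - writes_per_second(servers)

if __name__ == "__main__":
    for fleet in (100, 10_000):
        print(f"{fleet:>6} -> {2 * fleet:>6} servers: "
              f"{writes_per_second(fleet):g} -> {writes_per_second(2 * fleet):g} writes/s "
              f"(+{added_load_when_doubling(fleet):g})")
```

Both fleets double, but the small one adds 10 writes per second while the large one adds 1,000. If that new load arrives as a burst rather than gradually, the datastore has to absorb all of it at once.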

Relational databases: the overlooked workhorse

On the other end of the spectrum, we have relational databases like Postgres, which comes with more than two decades of production use and hardening (version 6.0 was released in January 1997) and plenty of industry experts who know its ins and outs. As a relational database, Postgres offers strong consistency and durability; it's an all-around robust and proven solution.
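In practice, strong consistency means a group of changes either lands completely or not at all. The sketch below shows a transaction that fails halfway through and leaves no partial update behind. To stay self-contained it uses Python's built-in `sqlite3` module as a stand-in for Postgres; the atomicity shown works the same way in Postgres, but the connection setup would differ. The `accounts` table and its rows are hypothetical.

```python
import sqlite3

# sqlite3 stands in for Postgres so the example runs anywhere;
# the accounts table and its rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (name TEXT PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 0)])
conn.commit()

def transfer(conn, src, dst, amount):
    """Move funds atomically: both updates commit, or neither does."""
    with conn:  # commits on success, rolls back if an exception escapes
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))

def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))

transfer(conn, "alice", "bob", 30)        # succeeds
try:
    transfer(conn, "alice", "bob", 500)   # second UPDATE violates the CHECK constraint
except sqlite3.IntegrityError:
    pass                                  # bob's +500 was rolled back with it

print(balances(conn))  # {'alice': 70, 'bob': 30}
```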

Returning to the problem I posed earlier about the often-instantaneous stresses of scale: you can hammer a technology like Postgres with a big surge of data, and it will absorb that sudden influx. While you may see a temporary performance degradation, you'll eventually dig yourself out, precisely because Postgres is mature and not distributed, so it doesn't need a consensus round for every write.

Although relational databases fell out of fashion with the rise of the cloud, they're still incredibly powerful, with a robust and capable interface (SQL, in the case of Postgres). And because Postgres and its ilk fell out of fashion, there's a whole generation of operators and developers who have never worked with them. When it comes to solving problems in cloud environments, distributed databases and hosted cloud database services are often seen as the only options.

As we well know, the cloud isn't going anywhere anytime soon, and relational databases (understandably, given their age) were not designed for cloud environments. What makes Postgres great (the fact that it's not distributed) is also where it falls short in the cloud. The strong consistency and durability you get from a relational database come at the cost of high availability. Said another way, Postgres is easy to scale up but hard to scale out. If you want Postgres to do more and go faster, you have to give it a beefier machine, which translates to a costly single point of failure. Distributed databases, on the other hand, can easily scale out, running on several modest machines instead of one really good one.
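A rough way to see the single-point-of-failure trade-off: if each machine is independently up with probability p, a lone server is available with probability p, while a three-member majority-quorum cluster stays available as long as any two members are up. The sketch below computes both. Independent failures is a simplifying assumption (real failures are often correlated), and the 99% per-machine figure is illustrative only.

```python
from math import comb

def single_node_availability(p: float) -> float:
    """Availability of one machine that is up with probability p."""
    return p

def quorum_availability(p: float, members: int) -> float:
    """Probability that at least a majority of `members` independent nodes are up."""
    majority = members // 2 + 1
    return sum(
        comb(members, k) * p**k * (1 - p) ** (members - k)
        for k in range(majority, members + 1)
    )

if __name__ == "__main__":
    p = 0.99  # illustrative per-machine availability (assumption)
    print(f"single node:   {single_node_availability(p):.4%}")
    print(f"3-node quorum: {quorum_availability(p, 3):.4%}")
```

Under these assumptions the three modest machines reach roughly 99.97% availability versus 99% for the single big one, but every write now has to wait for a majority of them to agree.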

If you're going to operate traditional technology like Postgres in the cloud (and you absolutely should; more on that later), you have to account for high availability. Are you provisioning a large enough machine to handle your workloads? Are you accounting for backups? Luckily, cloud providers have already stepped up to the challenge.

Relational databases in the cloud

The good news is that major cloud providers offer hosted Postgres. AWS, for example, provides it through Amazon Relational Database Service (RDS), which takes care of provisioning, management, backups, replication, and failover for these mature technologies. They've cloud-ified these technologies.

Much like the grumpy operator, cloud providers like AWS understand both the value of these old technologies and the fact that many enterprises require a relational database with a SQL interface. To help these enterprises move into the cloud, vendors like AWS offer like-for-like services so existing workloads can be forklifted over as-is. Not only do they recognize good tech, they also know they won't get many customers if they require users to rewrite their applications to migrate.

Sticking with our AWS example, AWS has all sorts of automated magic for running Postgres in the cloud. You can click a few buttons and stand up a Postgres instance with one or more hot standbys. Plus, there are automated maintenance windows, backups, and snapshots. Why would you use a distributed database when you have that service at your fingertips?
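As a sketch of what those few buttons look like through the API, the Python below assembles a request for the RDS `create_db_instance` call in boto3. `MultiAZ`, `BackupRetentionPeriod`, `PreferredBackupWindow`, and `PreferredMaintenanceWindow` are real parameters of that call; the instance name, instance class, storage size, and window times are placeholder values. The actual call is left commented out because it needs AWS credentials and creates billable resources.

```python
import os

# Sketch: request parameters for a Multi-AZ Postgres instance on Amazon RDS.
# Identifier, instance class, storage size, and windows are placeholders.

def postgres_instance_params(name: str, master_password: str) -> dict:
    return {
        "DBInstanceIdentifier": name,
        "Engine": "postgres",
        "DBInstanceClass": "db.m5.large",    # placeholder size
        "AllocatedStorage": 100,             # GiB, placeholder
        "MasterUsername": "postgres",
        "MasterUserPassword": master_password,
        "MultiAZ": True,                     # standby in a second AZ with automatic failover
        "BackupRetentionPeriod": 7,          # days of automated backups
        "PreferredBackupWindow": "03:00-04:00",               # UTC
        "PreferredMaintenanceWindow": "sun:05:00-sun:06:00",  # UTC, must not overlap backups
    }

def create_instance(params: dict) -> dict:
    import boto3  # imported lazily so the params can be inspected without AWS access
    rds = boto3.client("rds")
    return rds.create_db_instance(**params)

if __name__ == "__main__":
    params = postgres_instance_params(
        "grumpy-operator-db", os.environ.get("PGPASSWORD", "change-me")
    )
    print({k: v for k, v in params.items() if k != "MasterUserPassword"})
    # create_instance(params)  # uncomment to actually provision (requires AWS credentials)
```

Keeping the parameters in a plain function makes them easy to review or diff before anything is provisioned.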

There's another huge benefit to using a relational database with a cloud provider: no vendor lock-in. If you're using something like Postgres, you can simply move to Google Cloud, Azure, or any other cloud vendor that provides a similar service (or run your own Postgres). When you use a cloud provider's hosted distributed database, by contrast, you're locked in: it's their proprietary tech and interfaces, and few (if any) offer a standard open source technology out of the box. The major cloud providers don't offer a general-purpose hosted etcd, most likely because it would be too hard for them to operate and support their users at scale.

Filling the missing link between old and new

All this is not to say that we need to abandon all modern ways in favor of traditional tech. There does exist a happy medium where we can take advantage of the strengths of both old and new.

To again return to my favorite example, Postgres is a super robust relational database with a SQL interface, but its weak spot is high availability. On the other hand, you have etcd, a fantastic, highly available key-value store with strong consistency through consensus, great for configuration that doesn't change all that often, but it falls short in terms of scalability. Fortunately, there are solutions that merge these two technologies to create the best of both worlds. Patroni, for example, is written in Python and uses etcd (or another distributed configuration store, such as Consul or ZooKeeper) to auto-cluster Postgres. etcd acts as the source of truth for replication management: it records which Postgres node is the leader, which receives all writes, while the other nodes run as standbys replicating from it. If the leader becomes unavailable, Patroni promotes a hot standby to be the new leader.
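The core idea can be sketched as a leader lease: whoever holds a key in the consensus store is the leader, the leader keeps renewing that key before its time-to-live expires, and when it stops renewing, a standby claims the key with an atomic create-if-absent. The Python below simulates this in memory. `LeaseStore`, `run_cycle`, the key path, and the TTL are hypothetical stand-ins (etcd itself provides leases and atomic transactions), and this is a simplification, not Patroni's actual code; real Patroni also checks replication lag before promoting a standby.

```python
import time

class LeaseStore:
    """Hypothetical in-memory stand-in for etcd: keys with TTLs and atomic create."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None or entry[1] <= self._clock():
            self._data.pop(key, None)  # expired leases disappear
            return None
        return entry[0]

    def create_if_absent(self, key, value, ttl):
        """Claim `key` only if nobody holds it; returns False otherwise."""
        if self.get(key) is not None:
            return False
        self._data[key] = (value, self._clock() + ttl)
        return True

    def refresh(self, key, value, ttl):
        """Extend the lease, but only for the current holder."""
        if self.get(key) != value:
            return False
        self._data[key] = (value, self._clock() + ttl)
        return True

LEADER_KEY, TTL = "/service/pg-cluster/leader", 30  # hypothetical key and TTL (seconds)

def run_cycle(store, node):
    """One loop iteration for `node`: renew if leader, otherwise try to take over."""
    if store.refresh(LEADER_KEY, node, TTL) or store.create_if_absent(LEADER_KEY, node, TTL):
        return "leader"
    return "standby"

# Simulate with a fake clock instead of waiting in real time.
now = [0.0]
store = LeaseStore(clock=lambda: now[0])
print(run_cycle(store, "pg-1"), run_cycle(store, "pg-2"))  # leader standby
now[0] += 10
run_cycle(store, "pg-1")          # pg-1 renews its lease (now expires at t=40)
now[0] += 31                      # pg-1 goes silent past its TTL
print(run_cycle(store, "pg-2"))   # leader
```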

Tools like Patroni let you use these old and new technologies for what they're good for. It's a neat solution that supplies the missing link between old and new, letting you run new tech like Kubernetes alongside great tech from yesteryear, like Postgres.

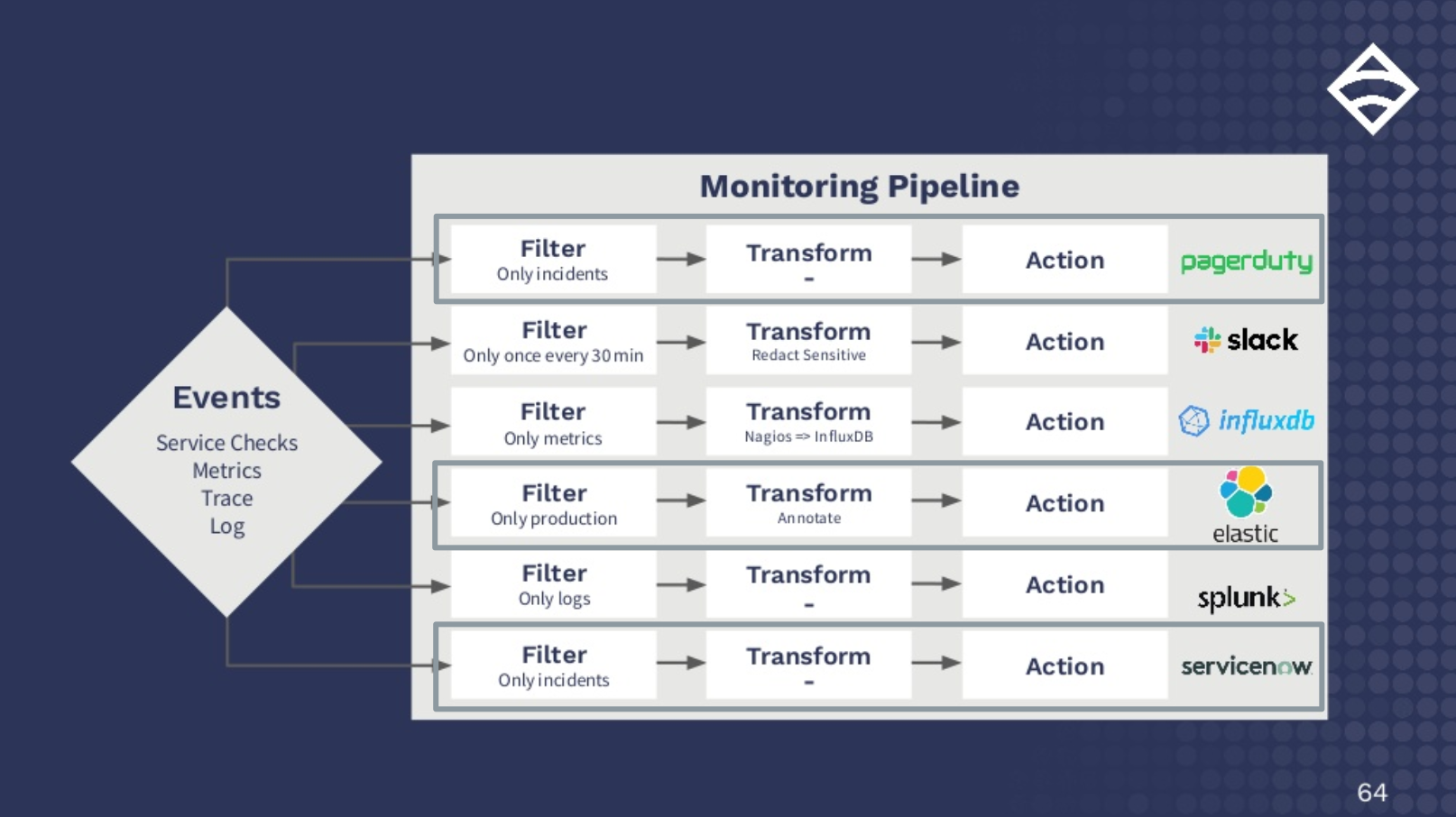

We've taken a similar approach to the challenges of scaling Sensu Go. Sensu configuration and cluster orchestration stay in etcd, and we've made it an option to move event storage to Postgres, which, in addition to improving performance by 10x, opens up new functionality like longer-term history and report generation. A pure etcd deployment gives new users quick time to value as they learn how Sensu works, and Postgres gives them a stable foundation to scale their deployment when they're ready. (For the full story on the technical decisions and lessons learned when scaling Sensu Go, check out my blog post.)
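A toy version of that split might look like the Python below: configuration lives in a small key-value map (standing in for etcd), while events go into a relational table (Python's `sqlite3` standing in for Postgres), where history and reports become ordinary SQL queries. The schema, key paths, check names, and helper functions are hypothetical and are not Sensu's actual data model; the status codes follow the common 0 = OK, 1 = warning, 2 = critical convention.

```python
import sqlite3

# Hypothetical stand-ins: a dict for the etcd-backed config store,
# an in-memory SQLite database for Postgres-backed event storage.
config_store = {"/checks/check-cpu": {"interval": 60, "command": "check-cpu.sh"}}

def get_check(name):
    """Configuration: small, rarely changing, looked up by key."""
    return config_store[f"/checks/{name}"]

events_db = sqlite3.connect(":memory:")
events_db.execute(
    "CREATE TABLE events (entity TEXT, check_name TEXT, status INTEGER, ts INTEGER)"
)

def record_event(entity, check_name, status, ts):
    """Append an event; the table keeps full history, not just the latest value."""
    with events_db:
        events_db.execute(
            "INSERT INTO events VALUES (?, ?, ?, ?)", (entity, check_name, status, ts)
        )

def failure_report(since_ts):
    """Count non-OK events per entity since `since_ts` (a simple report query)."""
    return events_db.execute(
        "SELECT entity, COUNT(*) FROM events "
        "WHERE status != 0 AND ts >= ? GROUP BY entity ORDER BY entity",
        (since_ts,),
    ).fetchall()

record_event("web-1", "check-cpu", 0, 100)
record_event("web-1", "check-cpu", 2, 160)
record_event("web-2", "check-cpu", 1, 160)
record_event("web-2", "check-cpu", 2, 220)
print(failure_report(since_ts=150))  # [('web-1', 1), ('web-2', 2)]
```

A key-value store is a natural fit for the configuration lookup, while the aggregate query over event history is the kind of work a relational database handles natively.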

The point is, we need to embrace the old along with the new. Time — and technology — keep marching forward, whether we like it or not.

Have your own hot takes on “boring tech”? Let us know in our Community Forum!